VMware vSphere – common myths debunked

Over the years, a number of common misconceptions about infrastructure Virtualization have taken hold and even gained widespread acceptance. I hope to factually debunk some of these myths, and possibly discover more myths in the process!

Myth #1

I have fewer than a dozen ESXi Hosts but I plan on breaking those up into several clusters of 3-6 Hosts each. I believe that I will have better control over resources by dividing my Hosts into multiple clusters and segmenting my Virtual Machines by category (VDI, DEV, etc.).

Reality #1

vSphere has always had a resource container known as a Resource Pool. This container is intended to divide and manage Compute resources within a cluster. In conjunction with separate Port Groups (VLANs) and Volumes, Resource Pools are the correct way to divide resources within a cluster.

The rationale for configuring fewer, larger Clusters is simple: the unintended failure of one Host removes a smaller percentage of total Compute resources in a 12 Host cluster than it does in a smaller cluster of 3, 4, or 6 Hosts.
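To put numbers on that, here is a minimal Python sketch. It assumes every Host contributes an equal share of cluster capacity, which is a simplification, and the function name is purely illustrative.

```python
def capacity_lost_percent(hosts: int, failed: int = 1) -> float:
    """Percent of total cluster compute lost when `failed` Hosts go down.

    Assumes identically sized Hosts (a simplifying assumption).
    """
    if hosts <= 0 or not 0 <= failed <= hosts:
        raise ValueError("need hosts > 0 and 0 <= failed <= hosts")
    return 100.0 * failed / hosts


if __name__ == "__main__":
    # Compare the cluster sizes mentioned in the text.
    for size in (3, 4, 6, 12):
        lost = capacity_lost_percent(size)
        print(f"{size:>2} Hosts: one failure removes {lost:.1f}% of capacity")
```

With equally sized Hosts, a single failure costs a third of a 3 Host cluster but only one twelfth of a 12 Host cluster.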

Larger clusters have the following advantages:

- Greater failover capacity

- Ability to implement a more effective Admission Control Policy without defeating the cluster’s original purpose. Example: In a 12 Host cluster of equally sized Hosts, Admission Control could protect (provide for) 3 Host failures while setting aside (reserving) only 25% of cluster resources.

- Services like DPM can be usefully implemented in larger clusters, whereas DPM is useless in clusters of 3 Hosts and questionable in clusters of 4-6 Hosts. Example: Given a quorum of three Hosts, DPM might be able to power down a single Host in a cluster of 4 Hosts. There is no reason, however, that DPM could not effectively power down 6 Hosts of a 12 Host cluster without violating availability constraints.
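The Admission Control arithmetic behind these advantages can be sketched in a few lines of Python. This is only a rough model: it assumes equally sized Hosts and a percentage-based reservation, and the function names are made up for illustration rather than taken from any vSphere API.

```python
import math


def reserve_percent_needed(hosts: int, failures: int) -> float:
    """Percent of cluster capacity to reserve so `failures` Hosts can be lost."""
    if hosts <= 0 or not 0 <= failures < hosts:
        raise ValueError("need hosts > 0 and 0 <= failures < hosts")
    return 100.0 * failures / hosts


def failures_covered(hosts: int, reserved_percent: float) -> int:
    """Whole Host failures covered by reserving `reserved_percent` of capacity."""
    if hosts <= 0 or not 0.0 <= reserved_percent <= 100.0:
        raise ValueError("need hosts > 0 and 0 <= reserved_percent <= 100")
    # Small epsilon guards against float rounding just below a whole number.
    return math.floor(hosts * reserved_percent / 100.0 + 1e-9)


if __name__ == "__main__":
    for size in (3, 4, 6, 12):
        covered = failures_covered(size, 25.0)
        print(f"{size:>2} Hosts, 25% reserved: covers {covered} Host failure(s)")
```

Under these assumptions, reserving 25% in a 3 Host cluster does not cover even one full Host, which is why small clusters force a much larger reservation to get any protection at all.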

The valid reason for dividing a larger number of Hosts into several clusters relates to basic vSphere limits per ESXi Host: not enough ports, not enough uplinks, or too many VMs per Host.

Myth #2

I plan on installing vCenter with an External Platform Services Controller. I believe that this will provide a more resilient and better performing installation at my location.

Reality #2

External Platform Services Controllers have several advantages, especially when multiple vCenter Servers are going to be required. Make no mistake: the PSC (Embedded or External) is a single point of failure unless otherwise protected!

That means an Embedded PSC installation, where vCenter and the PSC share one machine, has fewer single points of failure than an External PSC installation, where the PSC is one more machine that must stay available.

Myth #3

I am going to choose a SAN from an old-school vendor for my installation because they have the best products. I believe that the old-school storage vendors have an inherently better product.

Reality #3

While old-school storage vendors may have been around a while, so have their products. Many of the platforms being sold by the most well-known vendors are merely new plastic grills on the outside of fairly old products.

- Many of the best-known SAN/NAS products from some of the biggest vendors require a significant portion of spindles to be dedicated to the software/Operating System. That means you may pay for 25 spindles, but you may only get to use 21 of them!

- Many of the old-school vendors require continual license renewal in order to continue using hardware you have already purchased.

- Some common SANs do not even support a vSphere default configuration known as Delayed ACK.[1]

- Many vendors do not allow you to configure your own RAID groups (vdisks) on the SAN. This is not a deal-breaker on a true auto-tiering device, but should be avoided on any single-tier storage.

Look for trusted products, listed on the VMware HCL, that provide the agility you desire at a value you can accept.

Myth #4

I believe SSH is a vulnerability. I plan on requiring authorized administrators to enable SSH and/or the ESXi shell, on a per-host and per-session basis, only when absolutely required. I believe this will conform to “Best Practices” and secure the environment.

Reality #4

The reality is that SSH is an encrypted protocol by default! Moreover, if the vSphere Management Network is isolated and secure (only accessible by authorized administrators), then permanently allowing SSH for those same authorized administrators is only a time-saving measure in the event of an emergency.

In a hypothetical situation where you are managing a vSphere cluster on which lives depend, and something goes wrong, do you really want to spend additional time enabling SSH in order to begin solving the problem?

What if you contract for IaaS resources and have to make an appointment for IPMI access to enable SSH? How long is that going to take?
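If you do decide to leave SSH enabled, it can be scripted rather than clicked through on every Host. The following is a minimal sketch, assuming you use pyVmomi and already hold a connected `vim.HostSystem` object (the connection and lookup code is not shown). `keep_ssh_enabled` is a hypothetical helper name, and `TSM-SSH` is the service key ESXi uses for SSH as far as I know; verify both against your own environment before relying on it.

```python
SSH_SERVICE_KEY = "TSM-SSH"  # assumed ESXi service key for the SSH daemon


def keep_ssh_enabled(host) -> None:
    """Set SSH to start with the Host and start it now if it is stopped.

    `host` is expected to behave like a pyVmomi vim.HostSystem.
    """
    service_system = host.configManager.serviceSystem

    # Policy "on" means the service starts and stops with the Host.
    service_system.UpdateServicePolicy(id=SSH_SERVICE_KEY, policy="on")

    # Only start the service if it is not already running.
    running = {svc.key: svc.running for svc in service_system.serviceInfo.service}
    if not running.get(SSH_SERVICE_KEY, False):
        service_system.StartService(id=SSH_SERVICE_KEY)
```

Running this once per Host, on an isolated Management Network, means SSH is already available when the emergency arrives.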

Myth #5

When my users complain about slow performance in the environment, I typically add vCPU and RAM to each affected VM. I believe that adding vCPU to Virtual Machines improves the performance of those VMs.

Reality #5

The truth is, you are probably doing more harm than good by adding more than a handful (2-4) vCPUs to any one VM! Using 2 vCPUs by default with servers and advanced VDI workloads is all that is needed to support multi-threaded workloads.

Adding vCPUs (beyond 2 vCPUs) to a Virtual Machine only improves performance when those additional resources are actually required by the server. Any vCPU resources over and above what the VM actually uses will slow down every other VM running on that ESXi Host by causing unnecessary CO-STOP on the Host.

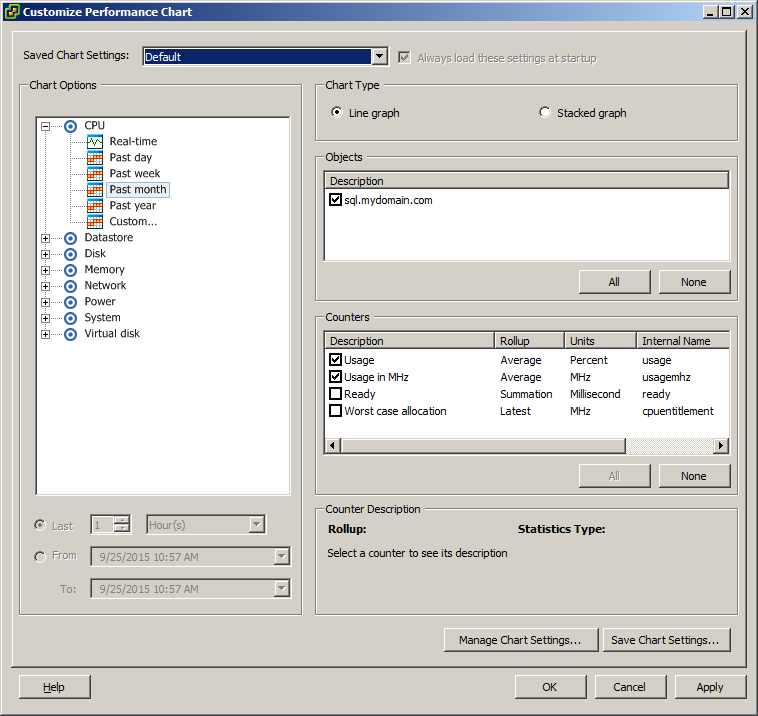

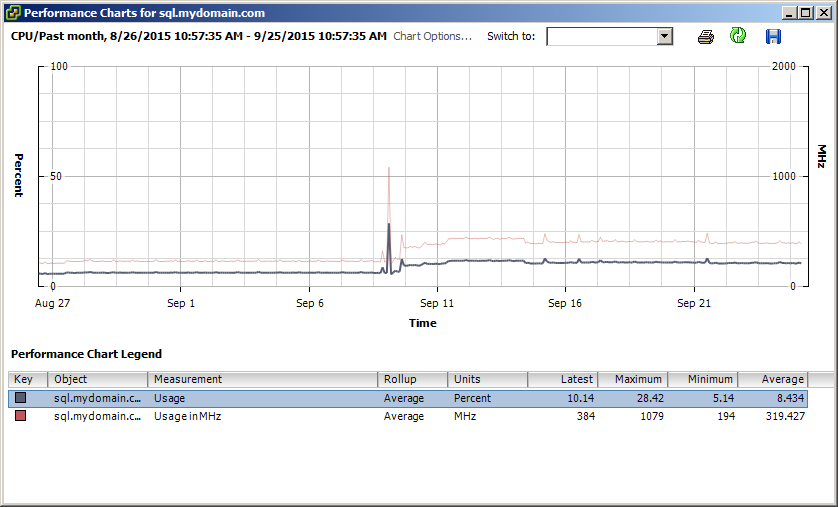

It’s easy to find and correct over-allocated vCPUs using the vSphere Performance Charts for any VM in question.

- Select the VM and go to Performance. The default chart is for CPU, but it shows only 2 hours.

- Configure the chart to cover a full business cycle (week, month, or year, whichever applies) and look at the maximum CPU utilization in percent.

- If the maximum utilization is less than 50%, it is very likely that there are too many vCPUs configured for the VM in question.
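The check described in the steps above can be expressed as a tiny Python sketch, for example against utilization figures exported from the Performance Chart. The function name and the sample values are hypothetical; the 50% threshold is the rule of thumb from the list, not an official VMware figure.

```python
from typing import Sequence


def looks_over_allocated(cpu_usage_percent: Sequence[float],
                         threshold: float = 50.0) -> bool:
    """Return True if peak CPU utilization never reaches `threshold` percent.

    `cpu_usage_percent` should cover a full business cycle, not just
    the default 2 hour chart window.
    """
    if not cpu_usage_percent:
        raise ValueError("need at least one utilization sample")
    return max(cpu_usage_percent) < threshold


if __name__ == "__main__":
    # Hypothetical samples for two VMs over one business cycle.
    quiet_vm = [4.0, 11.5, 23.0, 37.2, 18.9]
    busy_vm = [35.0, 62.5, 88.1, 71.4, 40.0]
    print("quiet_vm over-allocated?", looks_over_allocated(quiet_vm))
    print("busy_vm over-allocated?", looks_over_allocated(busy_vm))
```

A VM flagged this way is only a candidate for fewer vCPUs; the peak still needs to be judged against what the workload does during its busiest period.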

Myth #6

I believe vCenter should be installed on a physical server. How can vCenter be located on servers which it manages? I believe that if I lose vCenter, I will lose contact with everything!

Reality #6

This old myth has been disproved time and time again. Now, one of the most highly recommended methods of installing vCenter, the VMware® vCenter™ Server Appliance™, can only be deployed as a VM!

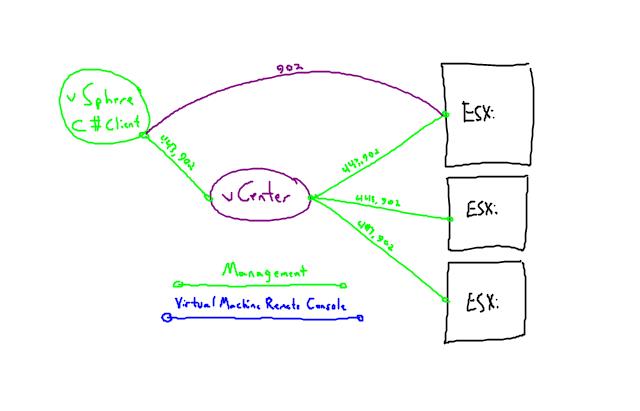

The truth is that vCenter does not tunnel anything. Rather, you connect to it (vCenter) and vCenter connects to ESXi Hosts. Even when you open a VMRC, the graphical data and user I/O are transmitted directly between the client and the ESXi Host where the Virtual Machine is located. That’s why so many users experience MKS issues with the VMRC!

Although a functioning vCenter is required to configure HA in the first place, vCenter is not required for HA to function and re-start VMs in the event of a host failure! HA is configured by way of agents which are installed on each ESXi host that is a member of the Cluster. Even if the very ESXi Host that contained vCenter (Windows or VCSA) were to fail, in 15 seconds the HA agents on the remaining ESXi Hosts would act to re-start all of the VMs including vCenter.

- If you relied on a physical server to host vCenter, and that server were to fail for any reason, the best-case scenario would require user intervention to get vCenter back online.

- If you installed vCenter as a VM (even on the very cluster that it manages), then the minimum expectation would be that vCenter would be re-started by HA were it to be affected by a Host failure.

- Theoretically, with vSphere Enterprise or vSphere Enterprise Plus Editions, it would be possible to leverage FT to protect vCenter above and beyond the protections HA affords!

Besides, how could you maintain that vSphere is suitable to protect mission-critical workloads if it can’t even protect itself?

[1] http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1002598
