vCenter 6 Email configuration remains stuck in 1997

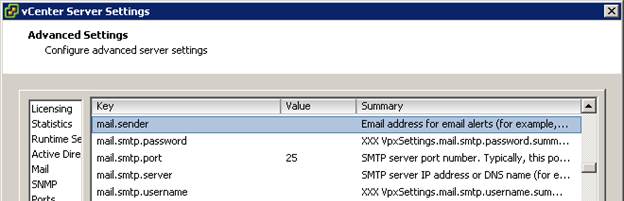

When vCenter 6 was released, I was personally very excited to see several email configuration options that had not previously been possible. The following new keys were listed under vCenter Server Settings > Advanced Settings:

- `mail.smtp.password`
- `mail.smtp.username`

One might be led to believe that the ability to configure an SMTP username and password implied that