Category: Operating Systems

Installing VMware Workstation Pro 14

VMware Workstation Pro 14 is a 64-bit Type 2 hypervisor available for Linux and Windows. As a Type 2 hypervisor, Workstation Pro runs as an application on top of a full operating system, such as Windows 10 or Ubuntu Desktop. It claims compute and hardware resources from the parent OS and then allocates those resources


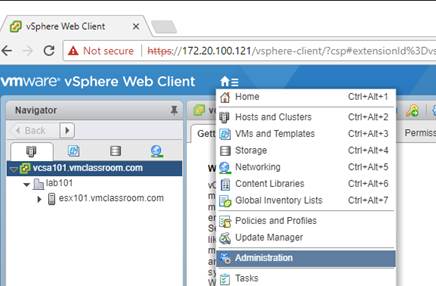

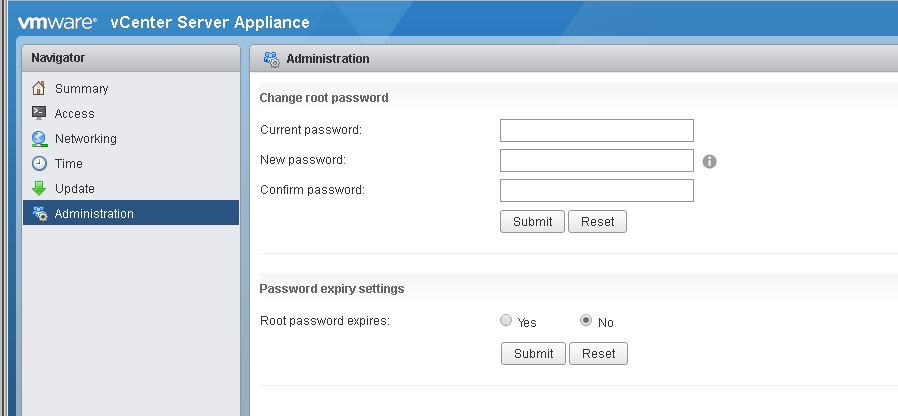

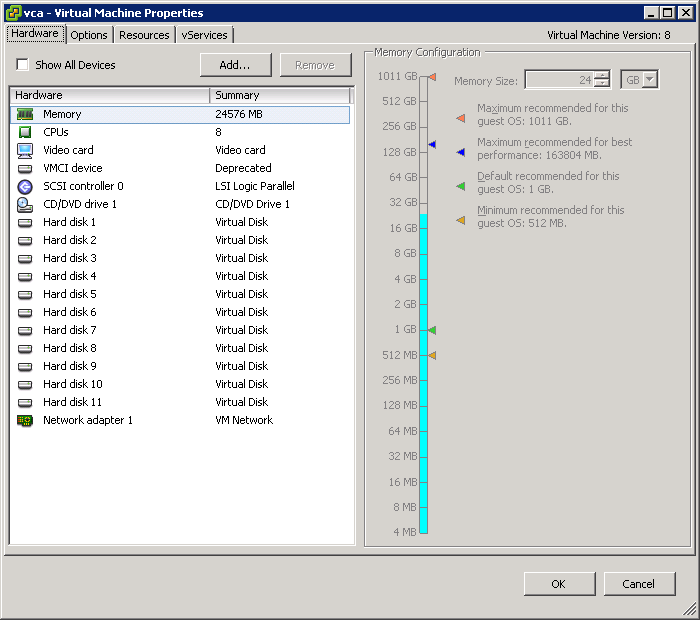

VCSA disks become full over time

I’ve recently spoken with a number of VMware vCenter Server Appliance 6 (VCSA) users who have had issues with the root filesystem of the VCSA running out of space. This situation seems to be occurring more often now due to a combination of when VCSA 6 went mainstream (18 to 24 months ago) and the


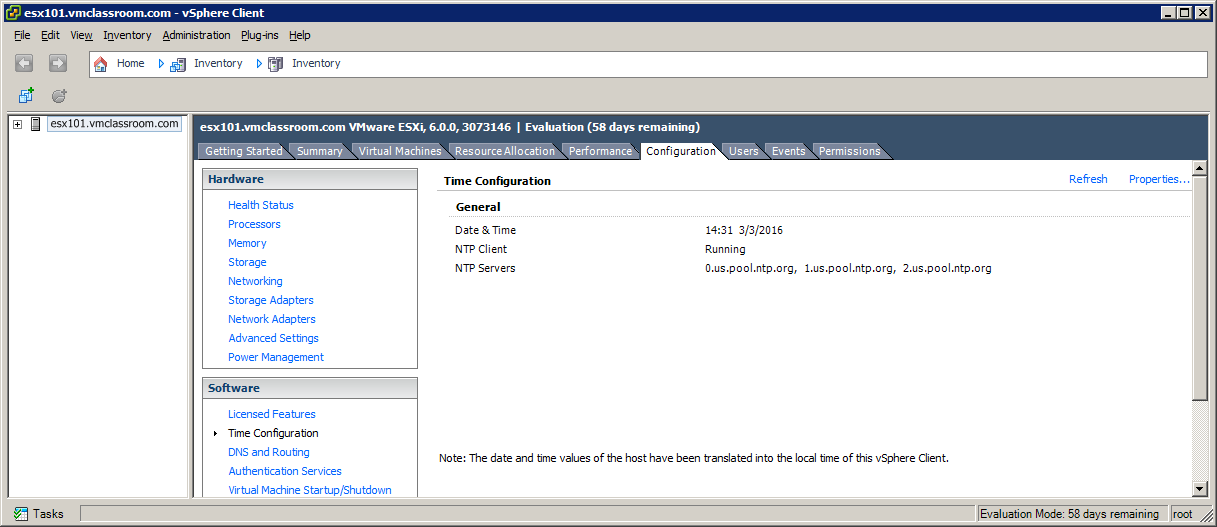

Timekeeping on ESXi

Timekeeping on ESXi hosts is a particularly important, yet often overlooked or misunderstood, topic among vSphere administrators. I recall a recent situation where I created an anti-affinity DRS rule (Separate Virtual Machines) for a customer’s domain controllers. Although ESXi time was correctly configured, the firewall had recently been changed and no longer allowed NTP. As
