VCSA and ESXi password security

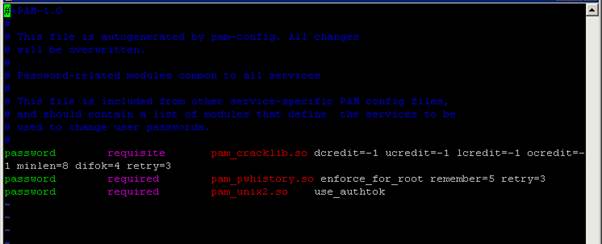

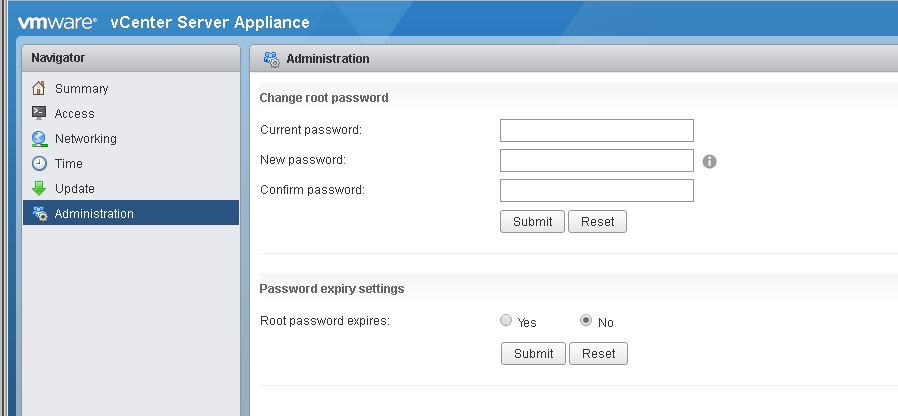

I recently went looking for information on password security for VCSA 6.0 and 6.5 and ESXi 6.0 and 6.5. Specifically, I was interested in the number of passwords remembered, so that I could define it in documentation for a client. Try as I might, I couldn’t find documentation for the number of passwords remembered on the VCSA.
