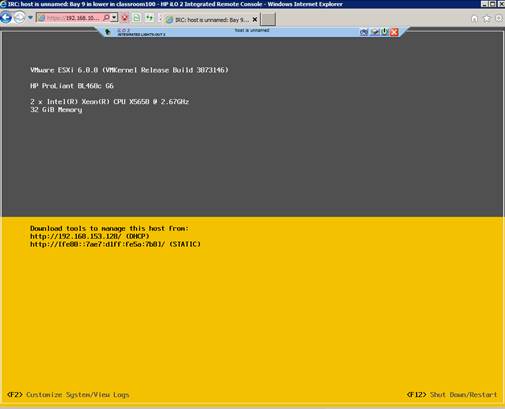

Revisiting scripted installation for ESXi 6.5

I thought I would revisit scripted ESXi installation for my lab. I haven't gone into depth on this since 5.0 or earlier, and there are some significant changes in 6.5. The example script draws heavily on other sources, and it is now working.